Notes from LessOnline 2025

At the end of May, I flew to Berkeley to attend LessOnline, an annual meetup of bloggers and other personalities associated with lesswrong.com and the Rationalist subculture. I had just walked across the stage at Harvard’s commencement ceremony to get my PhD diploma, and I was ready to stop worrying about federal science funding cuts and start worrying about existential risk.

This was my first time at LessOnline, since last year it conflicted with a conference on meiosis. I’m happy to say it was a great experience, and I wish I had skipped the meiosis conference and gone last year.[1]

A burning itch to know

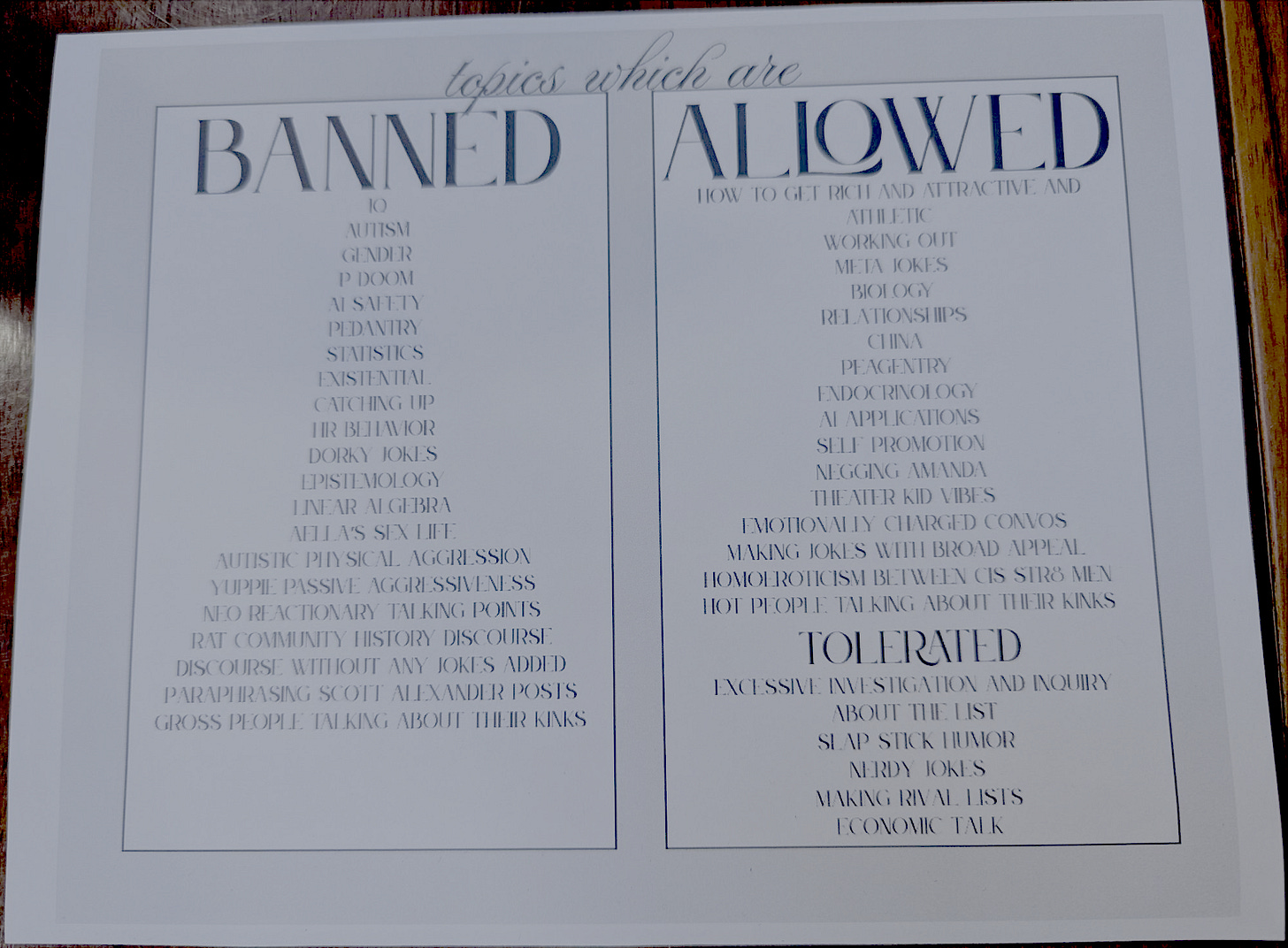

LessOnline was hosted at Lighthaven, a former hotel in Berkeley converted into a venue for events. The vibe of the space reminded me a lot of the MIT campus – a place where nerds can be themselves without feeling cringe about it, where it’s cool to write linear algebra or Elvish on the walls.

At the opening session, one of the organizers asked us to think of a word that characterized the people there. What I thought of was “curiosity”. It seemed that we all had a desire to know more about the world, not just because knowledge is power, but because knowing was interesting in itself.

I didn’t realize it at the time, but apparently curiosity is the First Virtue of Rationality. I guess I was in good company!

AI is a big deal

Although I don’t have access to the attendee survey results, I’d estimate that 80% of the attendees were working in software, of whom 80% were working on AI. The general mood was pretty grim – a common conversation topic was “what’s your p(doom)?”

Daniel Kokotajlo, one of the main authors of the AI 2027 report (which predicted that superintelligent AI would likely be developed by the end of 2027 and then go on to kill all humans), gave a presentation about this report. During the Q&A, when I asked him “What is the most important non-AI thing to be working on?”, he replied, “Prediction markets, to predict AI better.”

I tend to agree that superintelligent AI is likely within the next decade – and even if AI doesn’t become superintelligent soon, it will end up being “merely” as important as the Internet. However, there’s also a good chance (I’d estimate 33% or so, although this is a high variance estimate) that the current AI boom stalls out before reaching superintelligence. So I don’t think it’s worth living as if the world is going to end in 5 years, or neglecting to invest in biology research that may take 20 years to pay off.

Even outside of LessOnline, the Bay Area is really feeling the AI. I haven’t seen these kinds of billboards in Boston, but they’re everywhere around San Francisco:

Biology is cool too I guess

I gave a presentation about my work on meiosis and the research we’re doing at Ovelle to develop a system for growing human eggs from stem cells. This went pretty well, although it was difficult to strike a balance between making things understandable for computer scientists who didn’t have bio experience and not boring the other biologists who were there.

My friend from the Church lab, Devon Stork, gave a presentation about engineering bacteria to grow on Mars, which was super interesting. (You all should subscribe to his Substack!) I also enjoyed talking with Keoni Gandall about DNA synthesis, with Chase Denecke about gene editing, with Georgia Ray and Gavriel Kleinwaks about challenge trials, with Jeff Kaufman about pandemic prevention and early detection, and with his wife Julia about parenting. And I was glad to learn that my suggestion to Aella about working around her needle-phobia by using a jet injector was a huge success.

(There were a lot more interesting people too – if we talked and I’m not listing your name here, please don’t take offense!)

Other highlights

I learned about Anki and how to make cards using LLMs. Initially I was super excited about this, but it turned out that AI-generated Anki cards had more errors than I had realized.

Whoever came up with Rationality Cardinality is a genius.

The Fooming Shoggoths were at it again. The quality of the songs was somewhat variable, but I really liked “The Ninth Night of November”, which was about FTX and its tragic downfall.[2]

On the way home, I captured the essence of the Bay Area in one (slightly out of focus) picture:

I hope to be back next year, assuming AI doesn’t kill us all before then!

[1] Which turned out to be mainly about yeast, Drosophila, and C. elegans meiosis anyway. Very few people actually study meiosis in humans!

[2] This is rather personal for me because the FTX foundation told me on November 3, 2022 that they were awarding a grant to fund my meiosis project, on November 10 that they were in trouble, and on November 14 that "the funds to support your work are gone with everything else".
